Our Approach

Research

We began by building an understanding of our stakeholders, our users, and existing solutions. To understand the problem space and our user needs holistically, our team created a research plan that combined the methods described below.

We divided our research into two phases. In the first phase, we studied the context of the problem through an in-depth literature review and a competitive analysis. In the second phase, we distributed an initial survey to 400 participants and subsequently conducted 8 user interviews. We then synthesized the collected data into an affinity map that helped us build personas, empathy maps, and design concepts.

Literature Review

We performed an in-depth literature review to learn about meditation and the role it plays in counseling. We reviewed research on the effects of mindfulness techniques in therapy and on the benefits of tracking client progress to improve individual care.

Competitive Analysis

We also looked at existing apps on the market, such as Tergar and Buddhify, to get a better understanding of the commercial work being done in the mindfulness space. These solutions mainly tracked the quantitative aspects of meditation with descriptive statistics. However, to provide meaningful insights to counselors, we decided that we needed to go deeper and look into more sophisticated data analysis methods.

Establishing the Problem Statement

Upon reviewing existing literature and solutions, we met with our industry partner to establish project requirements and discuss how they would align with our team goals. At this stage, we identified our target users and stakeholders, and came up with a list of central questions that would guide our research moving forward.

Given the central questions displayed above and an in-depth literature review on the role meditation plays in counseling, we arrived at the refined problem statement displayed below:

“ZenVR wants to define the relevant characteristics of client progress on their meditation modules. Identifying these characteristics will enable counselors to track clients’ meditation journey and incorporate key insights into future counseling plans.”

Surveys

We started our data collection with surveys because we wanted a general understanding of whether, and to what extent, counselors track their clients’ progress on meditation.

We sent out over 350 emails to counselors across the country, inviting them to participate in our survey. In total, we received ~30 responses, which was close to the expected 10% response rate.
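As a rough check on the figures above, the short sketch below computes the observed response rate and the number of responses a 10% rate would have produced. It assumes exactly 350 emails and 30 responses, although the text gives both only approximately ("over 350" and "~30"). The function and variable names are illustrative.

```python
# Figures taken from the text; both are approximate there ("over 350", "~30"),
# so the exact values used here are assumptions for illustration.
emails_sent = 350
responses = 30
expected_rate = 0.10  # the response rate the team expected


def response_rate(received: int, sent: int) -> float:
    """Return the fraction of invitations that produced a response."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return received / sent


observed_rate = response_rate(responses, emails_sent)
expected_responses = expected_rate * emails_sent

print(f"Observed response rate: {observed_rate:.1%}")
print(f"Responses expected at a 10% rate: {expected_responses:.0f}")
```

Under these assumed figures the observed rate is about 8.6%, a little under the expected 10%, which would have corresponded to 35 responses.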

Semi-Structured Interviews

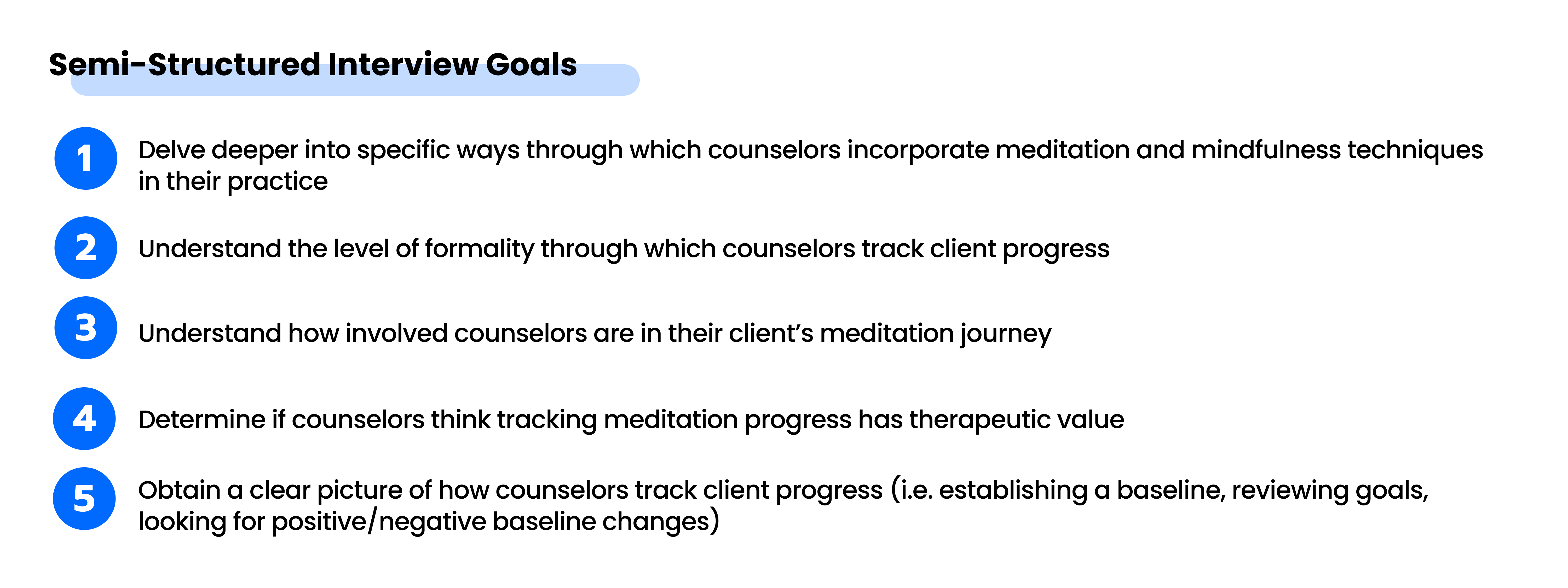

Once we gathered an array of high-level quantitative and qualitative insights from our survey, we started to design our semi-structured interviews to gain more in-depth information about the habits, attitudes, and preferences of counselors regarding meditation tracking.

Initially, we wanted to interview only college counselors who specialize in mindfulness and meditation therapy. However, we broadened our scope to include any counselors or therapists who integrate meditation or mindfulness-based techniques into their practice.

In total, we conducted eight 60-minute semi-structured interviews with counselors.

To gather rich and comprehensive data, the team focused on questions aimed at understanding the role of mindfulness and meditation in therapy. The final set of questions used in our semi-structured interviews is listed below:

- What is your role in the counseling center?

- How many clients do you interact with each day?

- How long is a typical session?

- Can you walk us through a typical session with a client?

- How do you incorporate meditation and mindfulness into your sessions?

- Do you track your clients’ progress? If so, what information do you currently track about your client?

- How often do you review a client’s progress?

- Do you recommend any meditation or mindfulness applications to your clients?

- What do you hope for your clients to get out of meditation?

- What kind of information is useful to know about your client’s meditation journey?

- How do you use that information to inform your therapy?

- How do you know if meditation is positively/negatively affecting your client?

- How do you define positive and negative change?

Mindmap of Final Interview Questions.

This was by far the most valuable research activity we conducted, since it gave us an in-depth understanding of the needs and preferences of counselors.

Analysis

Upon completing our interviews, we came together as a team to organize and analyze our findings through a series of interpretation sessions. We synthesized the collected data into an affinity map to help identify themes, form insights, and brainstorm design ideas to solve higher-order problems. The following are some of the specific themes we looked for during our interpretation sessions.

The affinity map after 14 long hours of transcribing ~500 notes and digitizing them.

We generated 60+ notes for each interview, yielding a total of ~500 notes across all eight interviews. While it was reassuring to find patterns that supported our hypotheses, we also paid close attention to emerging ideas that challenged our assumptions. We specifically looked for contrasting opinions and approaches to progress tracking to identify key differences within the field.

Findings

Based on the affinity map, we generated the following insights by grouping related notes under shared headings.

Storyboards, User Personas, and Empathy Maps

Using our acquired user needs and design implications, we developed storyboards, user personas, and empathy maps to tell a story from the data.

Storyboards

Based on our findings, we created two storyboards. The first focuses on how counselors take client notes and use them to track their clients’ progress. The second focuses on how counselors incorporate meditation and mindfulness techniques into their practice and how they track the resulting progress.

Personas

We then created three personas that captured the essence of our users and their characteristics. We also formed empathy maps and journey maps to understand their needs and frustrations.

User Personas (left to right): Marlene, Robert, and Viola

Empathy Maps

Empathy Maps (left to right): Marlene and Viola

Journey Map

Journey Map for Marlene